Hallucination

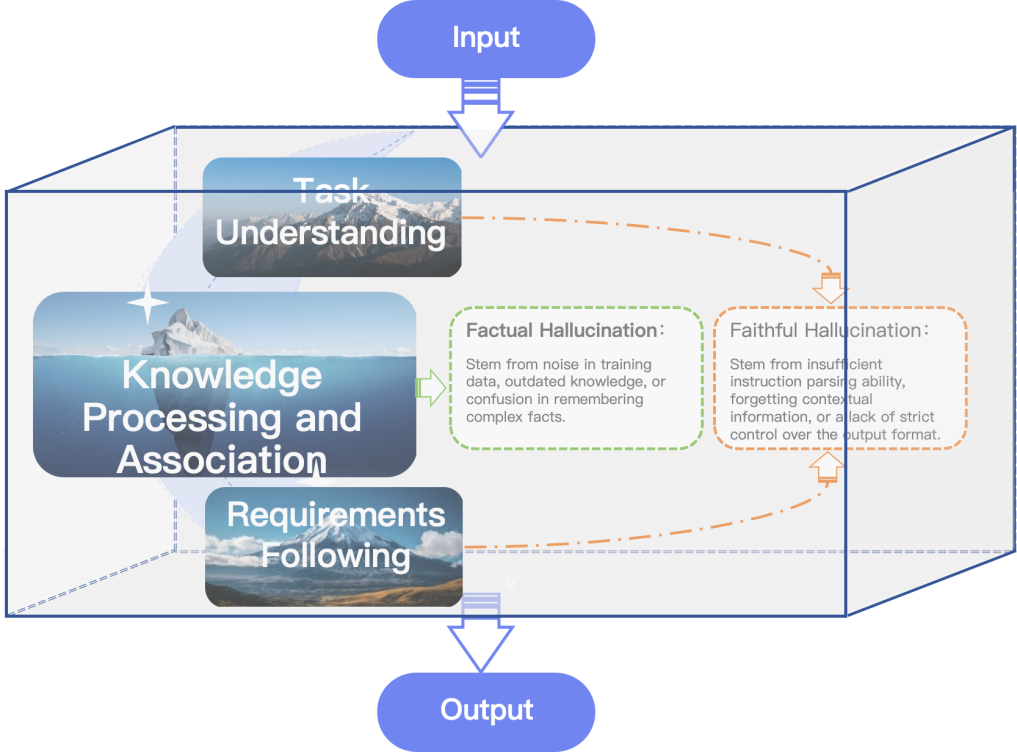

“Hallucination” refers to problems with the factual accuracy or contextual consistency of LLM-generated content. It can be divided into two categories: factual hallucinations and faithful hallucinations. A factual hallucination is generated content that does not accord with real-world information; it includes both the incorrect invocation of known knowledge (e.g., misattribution) and the fabrication of unknown knowledge (e.g., inventing unverified events or data). A faithful hallucination is a failure of the LLM to strictly follow user instructions, or an output that contradicts the input context; examples include omitting key requirements, over-extending beyond the prompt, and introducing formatting errors. To show how hallucinations in LLMs arise, Figure 1 gives a brief schematic of their core elements.